ICML 2023 Men Also Do Laundry: Multi-Attribute Bias Amplification Interpretable metrics for measuring bias amplification from multiple attributes

Background and related work

As computer vision systems become more widely deployed, there is increasing concern from both the research community and the public that these systems are not only reproducing but amplifying harmful social biases. The phenomenon of bias amplification, which is the focus of our work, refers to models amplifying inherent training set biases at test time1.

There are two main approaches to quantifying bias amplification in computer vision models:

- Leakage-based metrics2, 3, measuring the change in a classifier’s ability to predict group membership from the training data to predictions.

- Co-occurrence-based metrics1, 4, measuring the change in ratio of a group and single attribute from the training data to predictions.

Existing metrics1, 4 measure bias amplification with respect to single annotated attributes (e.g., `computer`). However, large-scale visual datasets often have multiple annotated attributes per image. For example, in the COCO dataset5, 78.8% of the training set of images are associated with more than a single attribute (i.e., object).

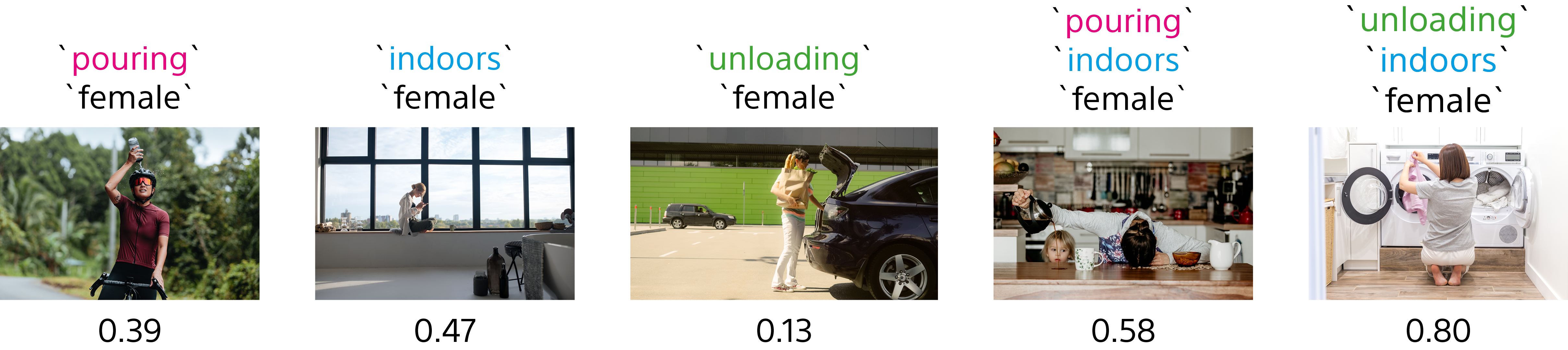

More importantly, considering multiple attributes can reveal additional nuances not present when considering only single attributes. In the imSitu dataset6, individually the verb `unloading` and location `indoors` are skewed `male`. However, when considering {`unloading`, `indoors`} in conjunction, the dataset is actually skewed `female`. Significantly, men tend to be pictured unloading packages `outdoors` whereas women are pictured `unloading` laundry or dishes `indoors`. Even when men are pictured `indoors`, they are `unloading` boxes or equipment as opposed to laundry or dishes. Models can similarly leverage correlations between a group and either single or multiple attributes simultaneously.

Key takeaways

We propose two multi-attribute bias amplification metrics that evaluate bias arising from both single and multiple attributes.

- We are the first to study multi-attribute bias amplification, highlighting that models can leverage correlations between a demographic group and multiple attributes simultaneously, suggesting that current single-attribute metrics underreport the amount of bias being amplified.

- When comparing the performance of multi-label classifiers trained on the COCO, imSitu, and CelebA7 datasets, we empirically demonstrate that, on average, bias amplification from multiple attributes is greater than that from single attributes.

- While prior works have demonstrated that models can learn to exploit different spurious correlations if one is mitigated8, 9, we are the first to demonstrate that bias mitigation methods1, 2, 10, 11 for single attribute bias can inadvertently increase multi-attribute bias.

Multi-attribute bias amplification metrics

We denote by and a set of group membership labels and a set of attributes, respectively. Let denote a set of sets, containing all possible combinations of attributes, where is a set of attributes and . Note if and only if in both the ground-truth training set and test set.

We extend Zhao et al.'s1 bias score to a multi-attribute setting such that the bias score of a set of attributes with respect to group is defined as: where denotes the number of times and co-occur in the training set.

Undirected multi-attribute bias amplification metric

Our proposed undirected multi-attribute bias amplification metric extends Zhao et al.'s1 single-attribute metric such that bias amplification from multiple attributes is measured: where and Here and denote an indicator function and the bias score using the test set predictions of and , respectively.

measures both the mean and variance over the change in bias score from the training set ground truths to test set predictions. By definition, only captures group membership labels that are positively correlated with a set of attributes, i.e., due to the constraint that

Directional multi-attribute bias amplification metric

Let and denote a model's prediction for attribute group, , and group membership, , respectively. Our proposed directional multi-attribute bias amplification metric extends Wang and Russakovsky's4 single-attribute metric such that bias amplification from multiple attributes is measured: where and Unlike , captures both positive and negative correlations, i.e., iterates over all regardless of whether . Moreover, takes into account the base rates for group membership and disentangles bias amplification arising from the group influencing the attribute(s) prediction (), as well as bias amplification from the attribute(s) influencing the group prediction ().

Comparison with existing metrics

Our proposed metrics have three advantages over existing single-attribute co-occurence-based metrics.

(Advantage 1) Our metrics account for co-occurrences with multiple attributes

If a model, for example, learns the combination of and , i.e., , are correlated with , it can exploit this correlation, potentially leading to bias amplification. By iterating over all our proposed metric accounts for amplification from single and multiple attributes. Thus, capturing sets of attributes exhibiting amplification, which are not accounted for by existing metrics.

(Advantage 2) Negative and positive values do not cancel each other out

Existing metrics calculate bias amplification by aggregating over the difference in bias scores for each attribute individually. Suppose there is a dataset with two annotated attributes and . It is possible that . Since our metrics use absolute values, we ensure that positive and negative bias amplifications per attribute do not cancel each other out.

(Advantage 3) Our metrics are more interpretable

There is a lack of intuition as to the “ideal” bias amplification value. One interpretation is that smaller values are more desirable. This becomes less clear when values are negative, as occurs in several bias mitigation works10, 12. Negative bias amplification indicates bias in the predictions is in the opposite direction than that in the training set. However, this is not always ideal. First, there often exists a trade-off between performance and smaller bias amplification values. Second, high magnitude negative bias amplification may lead to erasure of certain groups. For example, in imSitu, Negative bias amplification signifies the model underpredicts , which could reinforce negative gender stereotypes1.

Instead, we may want to minimize the distance between the bias amplification value and zero. This interpretation offers the advantage that large negative values are also not desirable. However, a potential dilemma occurs when interpreting two values with the same magnitude but opposite signs, which is a value-laden decision and depends on the system's context. Additionally, under this alternative interpretation, Advantage 2 becomes more pressing as this suggests we are interpreting models as less biased than they are in practice.

Our proposed metrics are easy to interpret. Since we use absolute differences, the ideal value is unambiguously zero. Further, reporting variance provides intuition as to whether amplification is uniform across all attribute-group pairs or if particular pairs are more amplified.

References

- J. Zhao, T. Wang, M. Yatskar, V. Ordonez, and K.-W. Chang, “Men Also Like Shopping: Reducing Gender Bias Amplification using Corpus-level Constraints,” in EMNLP, 2017.

- T. Wang, J. Zhao, M. Yatskar, K.-W. Chang, and V. Ordonez, “Balanced Datasets Are Not Enough: Estimating and Mitigating Gender Bias in Deep Image Representations,” in ICCV, 2019.

- Y. Hirota, Y. Nakashima, and N. Garcia, “Quantifying Societal Bias Amplification in Image Captioning,” in CVPR, 2022.

- A. Wang and O. Russakovsky, “Directional Bias Amplification,” in ICML, 2021.

- T.-Y. Lin et al., “Microsoft COCO: Common Objects in Context,” in ECCV, 2014.

- M. Yatskar, L. Zettlemoyer, and A. Farhadi, “Situation recognition: Visual semantic role labeling for image understanding,” in CVPR, 2016.

- Z. Liu, P. Luo, X. Wang, and X. Tang, “Deep Learning Face Attributes in the Wild,” in ICCV, 2015.

- Z. Li et al., “A Whac-A-Mole Dilemma: Shortcuts Come in Multiples Where Mitigating One Amplifies Others,” in CVPR, 2023.

- Z. Li, A. Hoogs, and C. Xu, “Discover and Mitigate Unknown Biases with Debiasing Alternate Networks,” in ECCV, 2022.

- Z. Wang et al., “Towards Fairness in Visual Recognition: Effective Strategies for Bias Mitigation,” in CVPR, 2020.

- S. Agarwal, S. Muku, S. Anand, and C. Arora, “Does Data Repair Lead to Fair Models? Curating Contextually Fair Data To Reduce Model Bias,” in WACV, 2022.

- V. V. Ramaswamy, S. S. Y. Kim, and O. Russakovsky, “Fair Attribute Classification through Latent Space De-biasing,” in CVPR, 2021.

Acknowledgments

This work was funded by Sony Research Inc. We thank William Thong and Julienne LaChance for their helpful comments and suggestions.